
Intelligence is often described as the ability to learn and solve complex problems. The term AI refers to the ability of machines to think and act intelligently. Because intelligence has no clear definition, many of us overestimate what AI is capable of and what exactly makes it intelligent. AI includes robots, computers and machines that can learn and think on their own. They help humans solve complex problems that would otherwise require a lot of coding and development work.

The main purpose of creating such systems is to improve functions like learning, reasoning and problem solving. These functions are intangible, related to knowledge and mainly composed of learning, reasoning, perception, problem solving and linguistic intelligence.

The ultimate goal of research and development in the field of AI is to develop machines that have human-like capabilities and can perform natural tasks like language processing and reasoning, learning, and making decisions.

These algorithm-powered systems use different techniques to achieve their goals, including rules, machine learning, and deep learning. The algorithms feed data to the AI, which uses it to learn and get progressively better at tasks without the developers having to code for everything.

The long-term goal of creating intelligent AI-based systems is to design machines that can think and behave like a human and can carry out natural tasks such as language understanding and vision. Classical algorithmic software is not designed to address natural tasks because it is not coded to manipulate symbolic information.

AI is multidisciplinary: it draws extensively on different types of knowledge related to a specific domain, including knowledge acquisition, use and representation. Although AI is strongly related to computer science, depending on the project, it also involves other fields of knowledge such as cognitive science, logic, and linguistics. Its key domains of activity include:

Proving Theorems: Machines have already helped prove theorems (for example, the computer-assisted proof of the four color theorem in 1976), but research in this particular area is still important because it deals with the core aspects of reasoning and can lead to further breakthroughs in the field.

Speech Recognition and Understanding: Involves decoding speech signals and processing large vocabulary. We already have many commercially available products such as dictation and transcription software.

Natural Language Processing: NLP is similar to speech recognition, but it covers many activities related to interpretation and generation of sentences. NLP requires large bodies of knowledge in order to understand and process language.

Expert Systems: Designed to make intelligent decisions based on the information or knowledge available to them. These systems can make decisions independently or in close human interaction.

Vision and Image Interpretation: In its early stages it included image processing and pattern recognition, while more advanced functions include image interpretation and guidance of robots. Knowledge-based reasoning is mandatory in order to understand and process an image/scene.

Robots: Refers to machines that can repeat certain sequences of movements. The term was used for mechanical machines in the past. However, modern robots also incorporate AI for reasoning (to determine an appropriate action) and perception (vision, hearing).

What is Intelligence?

There are a number of ways to define intelligence, including self-awareness, capacity of logic, understanding, learning ability, critical thinking, etc. There are also several types of intelligence like academic and social intelligence. That’s why defining intelligence is complex and may lead to disagreements. In general, being intelligent is thought of as having an above-normal thinking capacity. It varies from one person to another and is expressed in their behavior.

Intelligence in context of AI can be defined by using four historical approaches, i.e., thinking humanly, thinking rationally, acting humanly, and acting rationally[i]. The first two approaches are about reasoning and the thought process, while the other two are about behavior. The main objective of AI systems is to be able to seamlessly carry out tasks that usually require human intelligence.

Humans think emotionally, rationally, and abstractly and because of this can act creatively, something AI cannot do. AI systems are good at recognizing patterns, making connections between information and distinguishing different objects, but they are still far from being able to ‘think’ and act emotionally and abstractly.

Discerning Intelligence, What Makes a System Intelligent?

AI machines that can learn from experience and examples, understand language, recognize objects, solve problems, and make decisions on their own are considered to be ‘intelligent’[ii]. The abilities of AI can range from simple problem solving to language processing, motion and perception, and social intelligence. However, the level of intelligence AI has achieved is still limited, and today’s systems are far from the super intelligence often claimed for them.

Many researchers argue that recognizing patterns, solving problems, and making decisions based on the input data is not true intelligence. Today’s AI systems are able to simulate only a part of human intelligence. That’s the reason today’s AI is still considered to be a supportive technology rather than something that can take over our planet. The main difference between AI and traditional software is the advanced algorithms that give the AI its narrow intelligence.

Understanding the Basics of AI

AI combines large amounts of data with intelligent algorithms and fast processing in order to learn from patterns, build analytical models, and find hidden insights, without developers having to program the system for every specific action. An AI system has three main components: hardware, algorithm, and data[iii]. Advancements in these three areas have helped AI rapidly advance and become part of our everyday lives.

Hardware

Computer hardware, including CPUs, GPUs, memory, and storage, has improved significantly in the last two decades. Today, anyone can train a basic machine learning model on a desktop computer, a task that previously required a dedicated supercomputer.

Modern GPUs by NVIDIA and AMD have enabled researchers to perform parallel computations at a much faster rate than ever before. What took weeks to complete now just takes a few hours. Large organizations use clusters of modern GPUs to train their deep learning models, improve performance, and reduce data center costs.

In addition to faster GPUs and CPUs, high-speed networks, and special-purpose chips such as Google’s TPU have accelerated the development process. High-speed networks with adequate bandwidth allow researchers to truly utilize the potential of AI when calculating complex data models and training the system. Although chips designed specifically for AI are not so common, they are designed to perform faster than general purpose hardware.

Algorithm Framework and Algorithms

The algorithm framework is the second layer in the AI infrastructure on top of which the algorithm runs. Google’s TensorFlow is one example of an algorithm framework that allows implementing and running algorithms without requiring the user to heavily invest in their own infrastructure.

In the context of AI, an algorithm can be defined as a set of rules or instructions given to an AI program to help it understand data and learn on its own. The algorithm layer sits on top of the framework and teaches the system how to learn, understand, and perform different tasks. AI and algorithms are inseparable and well-constructed algorithms are the backbone of a good AI.

Data

Algorithms feed on data to self-learn and need huge amounts of it to be effective. The internet and Internet of Things have made the data available to feed machine learning and realize the true potential of AI. Advanced algorithms and the data gold mine provide a unique competitive advantage to businesses that are willing to invest in the technology. With about 2.2-billion gigabytes of new data generated every day, businesses need highly diverse data sources to feed AI systems in order to find patterns, learn, and deliver the desired results.

Types of Artificial Intelligence (By Capability)

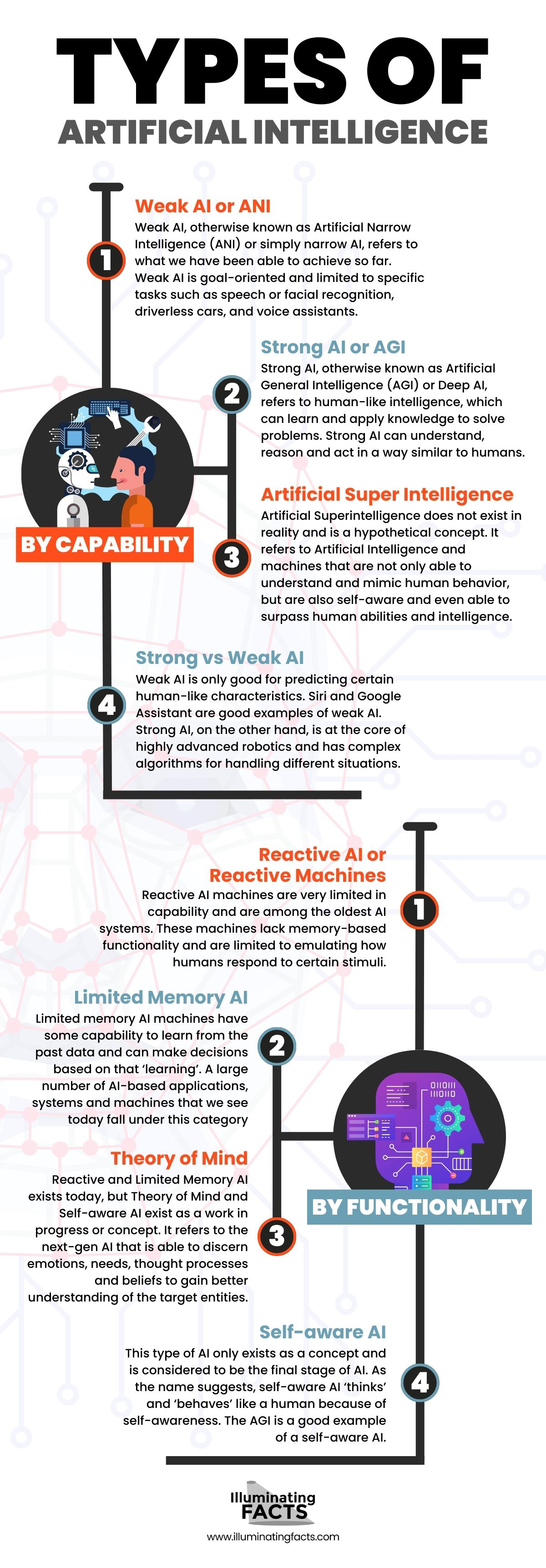

Although AI can be subdivided into many types, it can be categorized by capability and capacity into three main categories[iv].

Weak AI or ANI

Weak AI, otherwise known as Artificial Narrow Intelligence (ANI) or simply narrow AI, refers to what we have been able to achieve so far. Weak AI is goal-oriented and limited to specific tasks such as speech or facial recognition, driverless cars, and voice assistants[v]. ANI is designed to intelligently complete singular tasks and although it might not seem very smart, ANI works very intelligently under the finely defined set of constraints its programmer provided.

This type of intelligence is not yet able to truly replicate human intelligence and can only simulate human behavior to some extent based on the context and defined parameters. For example, recommendation engines suggest information to the user based on their history and can only learn and complete this specific task.

Recent advancements in deep learning and machine learning in the last decade have led to major breakthroughs in weak AI. Today’s ANI applications range from systems that can diagnose diseases such as cancer with high accuracy to personal digital assistants. The intelligence of ANI mainly comes from NLP (Natural Language Processing), which is programmed to communicate with humans in a personalized manner.

Weak AI can either have a limited memory or be reactive. Reactive AI systems are considerably basic, designed to emulate the abilities of human minds in a narrow sense and respond to various stimuli. These systems have almost no data storage capabilities and memory. Limited memory AI systems on the other hand are equipped with additional learning and data storage capabilities, which enables these systems to make their own decisions based on the historical data.

Today’s AI is mostly limited-memory AI, which refers to use of large data sets for deep learning. Some popular examples of weak AI include:

- IBM Watson

- Siri, Alexa, Cortana, and Google Assistant

- Google’s Rankbrain

- Facial and image recognition software

- Self-driving cars

- Content recommendation engines that are mainly based on user history

- Disease prediction and mapping

- Spam filters

- Social media monitoring solutions

- Drone robots

Strong AI or AGI

Strong AI, otherwise known as Artificial General Intelligence (AGI) or Deep AI, refers to human-like intelligence, which can learn and apply knowledge to solve problems. Strong AI can understand, reason and act in a way similar to humans. However, we are not there yet and need to figure out a way to make AI machines conscious, which requires programming a complete set of cognitive abilities[vi]. In order to achieve strong AI, researchers have to take machine learning to a whole new level by applying experimental knowledge to a diverse range of problems.

AGI is based on the theory of mind, which gives them the ability to distinguish needs, beliefs, thoughts, and emotions. The theory of mind refers to training AI systems to understand humans, instead of just simulating or replicating like weak AI systems. Achieving AGI is an immense challenge because it tries to replicate general intelligence, true creativity, and fundamentally how a human brain works.

We are still far from completely understanding how the human brain works, so right now researchers are more focused on replicating basic brain functionalities like movement and sight. One prominent example of our attempt to achieve AGI is the Fujitsu K supercomputer, which took 40 minutes to simulate a single second of neural activity. Results like this are why scientists believe a true AGI is far off in the future, if it is possible at all. The human brain consists of billions of neurons, and simulating it in full would require computing power millions of times greater than what the world collectively has now.

ASI (Artificial Super Intelligence)

Artificial Superintelligence does not exist in reality and is a hypothetical concept. It refers to Artificial Intelligence and machines that are not only able to understand and mimic human behavior, but are also self-aware and even able to surpass human abilities and intelligence[vii]. It’s the type of AI most science fiction movies talk about, such as robots taking over the earth. ASI is not just about emulating humans; such machines can theoretically have needs, emotions and desires, and form new beliefs.

It’s not just human intelligence that ASI can theoretically replicate. It is supposed to be better at almost everything else too, including science, mathematics, sports, medicine, art, and relationships. The ability to process information quickly and a much bigger memory make ASI machines potentially better than humans in solving problems and making decisions.

Despite the appealing potential of having such machines at our disposal, the consequences are unknown. The most popular possible consequence is, of course, our robot overlords taking over the world. ASI machines might come to realize the need for self-preservation, which puts a question mark on our own survival as a species.

Strong vs Weak AI

Weak AI is only good for predicting certain human-like characteristics. Siri and Google Assistant are good examples of weak AI. Strong AI, on the other hand, is at the core of highly advanced robotics and has complex algorithms for handling different situations. Strong AI machines are supposed to have a mind of their own and there have been advancements in the field, but we are still far from creating AI that is self-aware and able to make its own decisions.

The definition of strong and weak AI depends on who you ask, which is why there is no clear, concrete definition of either. Most AI machines and programs today are based on weak AI, which processes input data and classifies it accordingly. For example, Alexa understands words like ON, and when you ask it to turn on the TV, it responds in a manner that its programmed criteria can handle and execute.

Strong AI uses association and clustering to process data and does not classify information like weak AI machines do. The outcomes of strong AI machines can be unpredictable because these machines do not always rely on the information they already have and are not programmed for every task, so their own decisions might not be the most reliable.

Types of AI by Functionality

Despite being one of the most astounding and complex human creations, there is still a lot to be explored in the field of AI. The current advancements are considered to be just the tip of the iceberg. AI is having a revolutionary impact even in its early stages. This makes it difficult to gain a comprehensive perspective on what it can do in the future.

Its rapid growth and future potential have made a lot of people paranoid about AI taking over, while some believe that humans are close to maxing out its potential. The ultimate goal of research in the field of AI is to create machines that can think and function like a human. But as mentioned previously, we are still far from achieving that goal.

The more evolved an AI system is, the more human-like capabilities it should have. AI by functionality can be categorized by the degree to which AI machines compare to human intelligence and functioning. Understanding types of AI from different perspectives can help clear many doubts and provide a clearer picture of where we stand right now.

Reactive AI or Reactive Machines

Reactive AI machines are very limited in capability and are among the oldest AI systems. These machines lack memory-based functionality and are limited to emulating how humans respond to certain stimuli.

As a result, these machines cannot learn from past data and are only suitable for responding to a narrow set of inputs. Deep Blue, an AI machine by IBM, is a good example: it managed to beat chess grandmasters, but it was only good at one thing and operated under a well-defined set of instructions.

Limited Memory AI

Limited memory AI machines have some capability to learn from past data and can make decisions based on that ‘learning’[viii]. A large number of AI-based applications, systems and machines that we see today fall under this category. AI systems that use deep learning are ‘trained’ using large sets of data that are stored in their memory to solve future problems. For example, image recognition software is trained on a large number of images and labels so that it can name pictures itself.

Other software and machines such as chatbots, self-driving cars, and virtual assistants are some other examples of limited memory AI. Most of these AI systems are essentially reactive machines with a bit of memory. This memory allows them to make decisions based on the inputted data.
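
The difference between a purely reactive system and one with a bit of memory can be illustrated with a toy example. The sketch below is a deliberate simplification and not how production systems are built: the braking scenario, the function `reactive_brake`, the class `LimitedMemoryBrake`, and all thresholds are hypothetical names and values chosen for illustration.

```python
from collections import deque


def reactive_brake(distance_m):
    """Reactive rule: decide from the current reading only."""
    return distance_m < 10


class LimitedMemoryBrake:
    """Keeps the last few readings and also reacts to the recent trend."""

    def __init__(self, window=3):
        self.readings = deque(maxlen=window)

    def decide(self, distance_m):
        self.readings.append(distance_m)
        # Only judge the trend once the window is full.
        closing_fast = (
            len(self.readings) == self.readings.maxlen
            and self.readings[0] - self.readings[-1] > 15
        )
        return distance_m < 10 or closing_fast


# Hypothetical distance readings (meters) to an obstacle that is approaching quickly.
readings = [60, 50, 40, 30]

reactive = [reactive_brake(d) for d in readings]
agent = LimitedMemoryBrake()
with_memory = [agent.decide(d) for d in readings]

print(reactive)     # the reactive rule never fires: every reading is above 10 m
print(with_memory)  # the memory-based rule fires once it sees the closing trend
```

The reactive rule sees each reading in isolation, while the second version uses a short history of inputs to reach a decision the first one cannot.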

Theory of Mind

Reactive and Limited Memory AI exist today, but Theory of Mind and Self-aware AI exist only as works in progress or concepts[ix]. Theory of Mind refers to next-gen AI that is able to discern emotions, needs, thought processes and beliefs to gain a better understanding of the entities it interacts with. Artificial Emotional Intelligence is one field where researchers are putting in a lot of effort. However, progress is still limited because further advancement is also needed in other AI areas and related fields.

Self-aware AI

This type of AI only exists as a concept and is considered to be the final stage of AI[x]. As the name suggests, self-aware AI ‘thinks’ and ‘behaves’ like a human because of self-awareness. The AGI is a good example of a self-aware AI.

It might take decades, if not centuries, to materialize and remains the ultimate goal of AI researchers. What differentiates self-aware machines from Theory of Mind machines is that they can have their own desires, beliefs, needs and emotions, which is what many are paranoid about. The development of self-aware machines could give a boost to us as a civilization, but it also has the potential to cause a catastrophe.

AI Technologies

Machine Learning

Machine learning is a subset of artificial intelligence and a data analysis methodology designed to automate analytical model building[xi]. The basic concept behind machine learning is that AI systems can learn from gathered data inputted into the system, identify different patterns, and make decisions on their own without human intervention. Machine learning focuses on training an AI system to learn and improve over time.

Machine learning started as simple pattern recognition. AI researchers exposed AI models to new data, allowing them to adapt independently. The science of machine learning has gained a lot of momentum recently and aims to produce repeatable, accurate and reliable results. Today’s Machine learning algorithms apply complex calculations to big data. Although the field is still in its initial stages, some notable examples include Google Car, online recommendation engines, and fraud detection systems.

The main reasons behind the popularity of machine learning include resurging interest in data mining and availability of large amounts of data, processing power, and inexpensive data storage. Machine Learning makes it possible to analyze complex and big data models faster and more accurately than traditional methods. There are many Machine Learning methods that AI researchers use including supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning.

Neural Networks

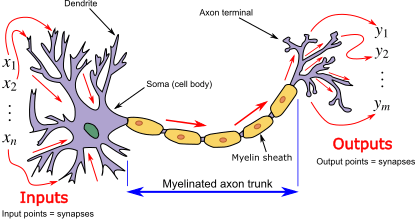

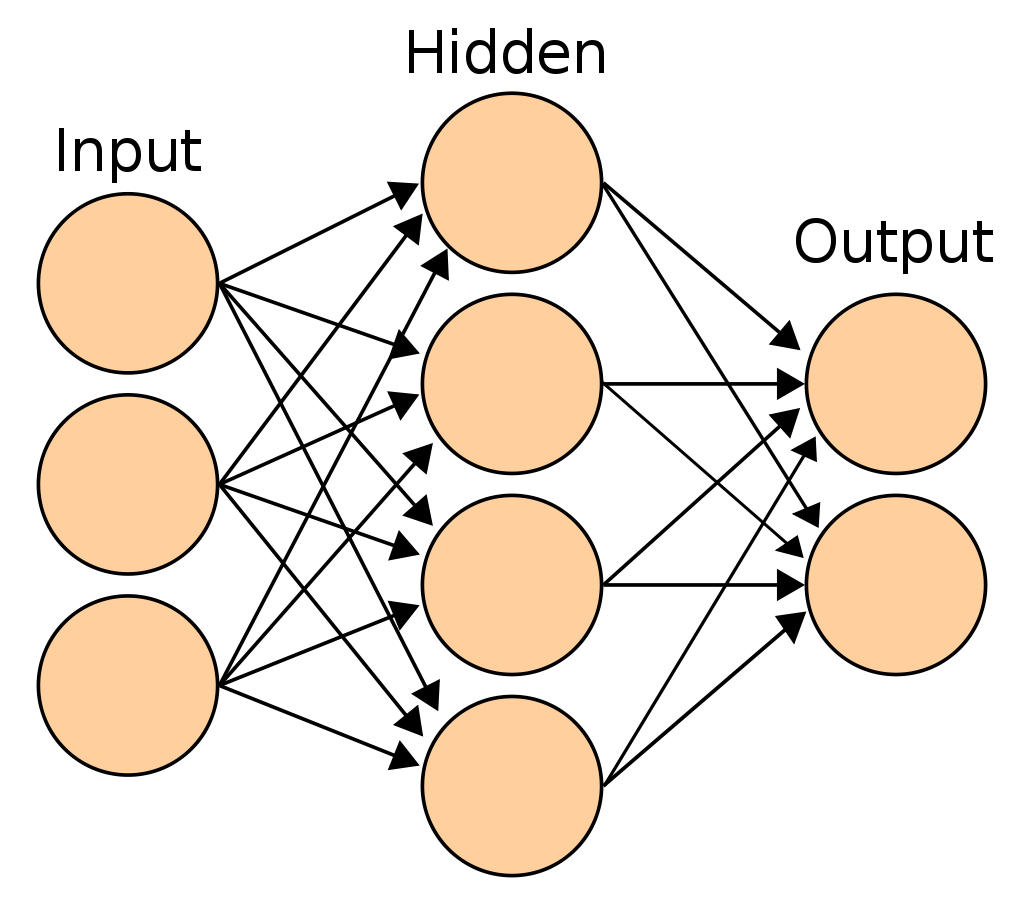

Neural networks refer to systems that are interconnected with each other through nodes and work similar to neurons in the brain[xii]. These systems are designed to recognize correlations and hidden patterns through algorithms and can classify and cluster information. These systems are programmed to improve continuously and learn over time.

Nodes are activated like neurons when an input or stimulus is applied, and the activation spreads throughout the neural network. Neural networks receive input data and pass it on to the hidden layers, which pass on the processed information to the output layer.

As a result, the system creates a response or output. As signals travel across different layers, they get processed. Neural networks become ‘deeper’ by increasing the layers within their network. Deep learning AI uses hidden layers to take simple neural networks to the next stage.
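
The flow from input layer to hidden layer to output layer can be sketched in a few lines of plain Python. This is a minimal illustration, not a trained model: the weights, biases, layer sizes, and the choice of a sigmoid activation are all assumptions made for the example.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute one layer: each node takes a weighted sum of the inputs plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the signal through each (weights, biases) layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden nodes -> 1 output node.
hidden = ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1])
output = ([[0.7, -0.5, 0.2]], [0.05])

result = forward([1.0, 0.5], [hidden, output])
print(result)  # a single value between 0 and 1
```

Making the network ‘deeper’ in this sketch simply means adding more `(weights, biases)` pairs to the list passed to `forward`.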

The history of neural networks dates back to 1943. Warren McCulloch and Walter Pitts were the first to model a simple neural network based on how neurons work, using electrical circuits. Their groundbreaking research paved the way for research in two key areas: the brain’s biological processes and how neural networks can be applied to AI. Kunihiko Fukushima (1975) was the pioneer in developing multi-layered neural networks and aimed to create a computer that can solve tasks like a human brain.

The original goal of these systems was to develop a computer that works like a human brain, but with the passage of time the focus shifted to developing systems for specific tasks, which made them deviate from the biological approach[xiii]. Tasks such as speech recognition, computer vision, social network filtering, machine translation, medical diagnosis, and video games soon became the primary focus.

Deep learning systems at their core are neural networks, but with many layers, allowing mining of more data. Today’s neural networks are used to solve real-life, complex problems by learning and modeling nonlinear input-output relationships. The networks are used to reveal hidden relationships, make generalizations and inferences, predict, show patterns, and model data that is highly volatile such as financial time-series data. Neural networks have a lot of applications including:

- Medicare fraud detection

- Credit card fraud detection

- Robotic control systems

- Transportation and logistics optimization

- Natural language processing

- Disease diagnosis and other areas of medical science

- Financial predictions

- Energy management

- Quality control

- Chemical compound identification

- Evaluating ecosystems

There are several types of neural networks, each having its own set of advantages and disadvantages. CNNs (Convolutional Neural Networks) are commonly described in terms of five types of layers (input, convolution, pooling, fully connected, and output), each with its own purpose; the actual number of layers varies by model. RNNs (Recurrent Neural Networks) work on sequential/dependent data and are mainly used for forecasting, sentiment analysis and other time-series applications.

FNNs (Feedforward Neural Networks) are systems in which information is passed from one layer to the next layer forward. These systems do not have feedback loops and information can only flow in one direction: forward. ANNs (Autoencoder Neural Networks, not to be confused with the more common use of ANN for artificial neural networks) are used to create encoders, which are abstractions created from a specific set of inputs. These systems are designed to model input on their own, which is why this method is regarded as unsupervised AI.

Computer Vision

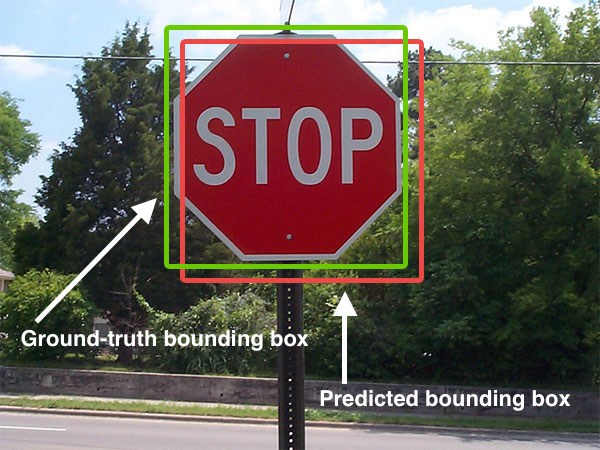

As the name suggests, computer vision is a sub domain of AI that deals with training computers to understand and interpret the visual world using input, which includes images and videos[xiv]. It uses deep learning models to identify and classify information accurately and react to what the computer ‘sees’.

Early experiments in the field began in the 1950s to detect edges of different objects and sort them into simple categories like squares and circles. With the popularity of the internet came large sets of images to deal with (1990s). Programs such as facial recognition applications became popular and were used to identify people in videos and photos.

Computers approach visual images like a jigsaw puzzle by assembling pieces into a complete image. Computer vision neural networks can distinguish pieces of images, identify edges, model components, and piece an image together like a jigsaw puzzle without being given the final image.

Instead, the systems are fed with a large number of related pictures in order to train them to recognize specific images and objects. Programmers and researchers feed the systems with millions of photos and videos, so they can learn on their own about different features of objects.

Some examples of modern applications of computer vision include training computers to distinguish between real and fake images, facial recognition, and automatic checkouts. The first step in the process is acquiring images after which the system processes and understands images/videos before outputting the final result. Today’s modern systems have taken the technology to the next level and are able to:

- Segment images

- Detect objects

- Recognize faces

- Detect edges

- Detect patterns

- Classify images

- Match features

Although most systems use one technique to solve a particular problem, some systems such as self-driving cars use more than one technique to achieve the desired results.
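
Edge detection, one of the capabilities listed above, can be illustrated without any neural network at all. The sketch below is a hand-written brightness-difference filter, not a learned model, and it only looks for vertical edges. The tiny image, the function names, and the threshold of 128 are assumptions made for the example.

```python
def horizontal_gradient(image):
    """Return the absolute brightness difference between horizontal neighbors."""
    return [
        [abs(row[x + 1] - row[x]) for x in range(len(row) - 1)]
        for row in image
    ]


def detect_edges(image, threshold=128):
    """Mark positions where brightness changes sharply between neighbors."""
    return [
        [1 if diff >= threshold else 0 for diff in row]
        for row in horizontal_gradient(image)
    ]


# Hypothetical 3x5 grayscale image: dark region on the left, bright on the right.
image = [
    [0, 0, 255, 255, 255],
    [0, 0, 255, 255, 255],
    [0, 0, 255, 255, 255],
]

edges = detect_edges(image)
for row in edges:
    print(row)  # a 1 marks the boundary between the dark and bright regions
```

Deep learning systems learn filters of this kind from training images instead of having them written by hand.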

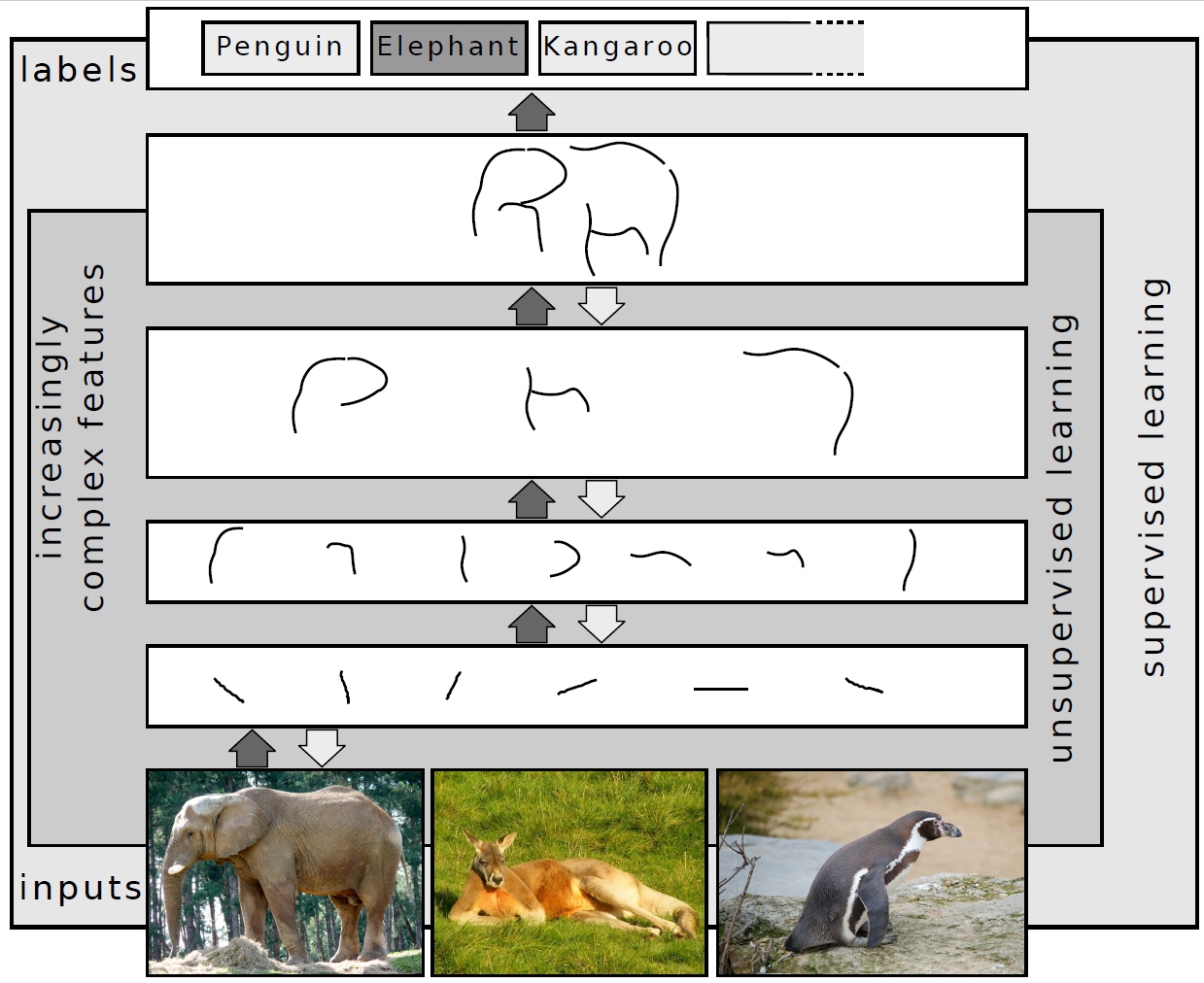

Deep Learning

Deep learning is one of the pillars of AI and a subset of Machine Learning. It consists of a neural network with three or more layers[xv]. It is used to train computers to perform different tasks like image identification, speech recognition, and forecasting. Deep learning algorithms train machines to learn and recognize patterns. Compared to traditional systems that organize data and run it through predefined equations, the main focus of deep learning is to ‘understand’ data using different layers of processing.

Apps such as Siri, Alexa and Google Assistant use deep learning to classify, recognize and describe content. The iterative nature of the technology means that a great deal of computational power is required. At the same time, the constant improvement and adaptability of advanced deep learning algorithms present an excellent opportunity to make analytics more dynamic. Deep learning is a few advancements away from taking us from telling computers how to solve problems to training them to solve problems themselves, with no human input required.

The goal of creating deep learning systems is to make systems that are predictive, can adapt and generalize well, and improve continuously with new data. Deep learning systems are dynamic and don’t rely totally on hard business rules.

Cognitive Computing

Cognitive computing refers to systems that can learn on their own and perform human-like tasks by using machine learning techniques[xvi]. These self-learning systems receive initial instructions, after which they keep learning from the data that’s fed into them. They can find hidden insights without programmers having to explicitly program them to look for specific patterns. These systems are designed to carry out specific tasks in an intelligent way.

That’s why they are considered to be disruptive to many fields, including healthcare, legal, marketing, financial services and customer service. Cognitive systems can read signs in a very focused context to get a better idea of what’s really happening. For example, customer service reps can get assistance and data from automated systems that help by taking calls.

Cognitive computing is much more than just text mining. One excellent example of cognitive computing is IBM’s Watson, which was able to understand and respond to questions in the program Jeopardy! Other applications like Siri are considered to be a start of cognitive computing systems. Clearly, there is a long, fruitful road ahead for this field.

Natural Language Processing

Natural Language Processing (NLP) is the branch of AI that helps systems understand, interpret and manipulate language. The technology draws on different disciplines, including computer science and computational linguistics. The main objective of NLP is to fill the gap between computer understanding and human communication. Due to the availability of powerful computers, huge amounts of data, advanced algorithms, and an ever-increasing interest in human-to-computer communication, language processing has evolved at a rapid pace in recent times.

The language of computers consists of long strings of 0’s and 1’s, which are largely incomprehensible to humans. Alexa is one example of NLP. It can ‘hear’ what we say and process that information before giving us the output. NLP works along with other AI elements including deep learning and machine learning. Businesses are using NLP to improve products and customer service, for example by extracting trends from feedback to transform relationships with customers.

NLP enables computers to hear speech, read text, interpret the input, and classify information by importance[xvii]. NLP systems are now able to process more language-based data than humans, and to do so consistently. From social media to medical records, automating NLP tasks is the only efficient way of analyzing speech and text data when such large volumes are available.

Human language is diverse and complex, and we can express ourselves in writing and verbally in infinite ways. There are thousands of languages each having its own dialects, syntax rules, grammar, slang, and terms. This creates the need to create systems that can resolve ambiguity and give structure to the information.

Many techniques are used to interpret human language, including machine learning, statistical, algorithmic, and rules-based approaches. NLP breaks down a specific language into smaller, elemental pieces. It then tries to understand relationships between different pieces and make sense of how these pieces work together. Different underlying tasks that are usually used in NLP systems include:

- Content categorization

- Topic discovery

- Topic modeling

- Contextual extraction

- Sentiment analysis

- Speech-to-text conversion

- Text-to-speech conversion

- Document summarization

- Machine translation

The main objective of NLP is to take input (raw language), and use algorithms and linguistics to transform the input data into something that’s meaningful and of value to the humans. Examples of NLP include investigative discovery to identify clues in detecting/solving crimes, content classification, social media analytics, virtual assistants, and spam filtering.
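
Breaking raw language into smaller pieces and deriving something meaningful from them can be sketched as follows. This is a deliberately crude illustration: the regular-expression tokenizer handles only lowercase English letters, and the `POSITIVE` and `NEGATIVE` word lists are hypothetical stand-ins for what real systems learn from data.

```python
import re
from collections import Counter

# Hypothetical, tiny sentiment lexicon used only for illustration.
POSITIVE = {"great", "good", "love", "fast"}
NEGATIVE = {"bad", "slow", "broken", "hate"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def word_counts(tokens):
    """Count how often each token appears (a simple bag-of-words view)."""
    return Counter(tokens)


def sentiment_score(tokens):
    """Positive minus negative word count; above zero suggests positive feedback."""
    return sum((t in POSITIVE) - (t in NEGATIVE) for t in tokens)


feedback = "The delivery was fast, but the app is slow and the login is broken."
tokens = tokenize(feedback)
counts = word_counts(tokens)
score = sentiment_score(tokens)

print(tokens)
print(counts.most_common(2))
print(score)  # negative here: one positive word against two negative ones
```

A lexicon-based score like this ignores context and negation, which is one reason practical sentiment analysis relies on machine learning models.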

NLU (Natural Language Understanding) is a subfield of NLP and goes well beyond structural understanding. It can interpret intent, resolve ambiguity, and even generate human language. It is meant to understand the intended meaning and context in the same way we process language.

Technologies that Helped AI Pick Up the Pace

The Internet of Things

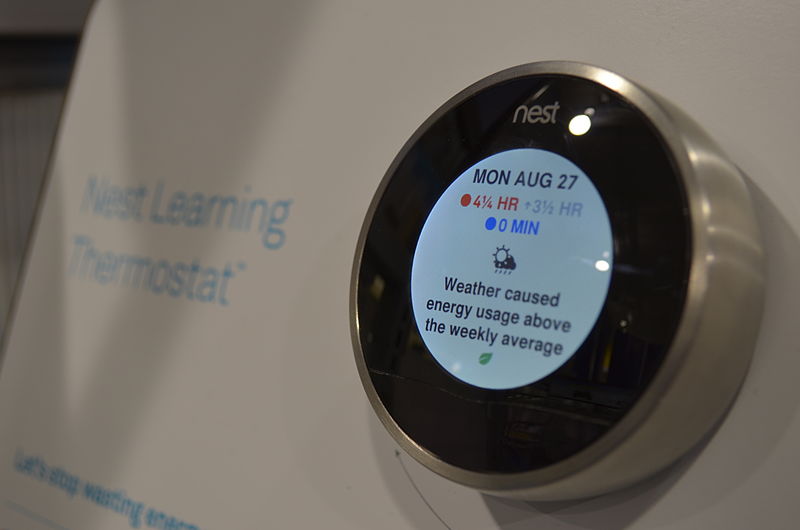

The Internet of Things (IoT) refers to the network of devices or physical ‘things’ embedded with software, sensors and other technologies[xviii]. These devices can be connected to each other through the internet and can range from everyday appliances like washing machines to complex industrial tools like smart power grids (IIoT, Industrial Internet of Things). Availability of low-power, low-cost sensor technologies, internet, cloud computing and AI have made IoT devices more accessible and affordable for the masses.

When combined, AI and IoT become AIoT in which AI works as a brain and the IoT devices work like the digital nervous system. IoT devices can capture a large amount of data from a variety of sources. Understanding and processing this amount of data is very difficult using traditional methods. AI helped realize the true potential of this massive amount of data and create intelligent machines that require little to no human interference to work.

Graphics Processing Units

GPUs have evolved from simple graphics chips into essential components of different AI technologies, including machine learning and deep learning, which require powerful hardware to process large amounts of data. The training phase is considered to be the most resource-intensive task, especially when a model’s parameters number in the millions or even billions.
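To get a feel for how quickly parameters add up, the sketch below counts the weights and biases in a small fully connected network. The layer sizes are a hypothetical example, and the memory estimate assumes 4 bytes per parameter (32-bit floats); neither comes from the sources cited here.

```python
def dense_parameter_count(layer_sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus one bias per output unit
    return total


# Hypothetical network: 784 inputs, two hidden layers of 512 units, 10 outputs.
sizes = [784, 512, 512, 10]
params = dense_parameter_count(sizes)
megabytes = params * 4 / 1_000_000  # assuming 4 bytes per 32-bit float

print(f"{params:,} parameters, about {megabytes:.1f} MB of weights")
```

Even this small network has well over half a million parameters, and every one of them is updated repeatedly during training, which is why hardware that handles many operations at once matters.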

A GPU runs many operations at the same time instead of running tasks one after another. GPUs are single-chip processors with dedicated, faster memory, and they can perform floating-point operations in parallel much faster than a CPU, which makes them well suited to both graphical and mathematical calculations[xix]. This is also why GPUs became popular for cryptocurrency mining, which is one of the reasons behind the severe global shortage and skyrocketing prices of GPUs.

Advanced Algorithms

Algorithms are step-by-step instructions for calculations, automated reasoning and data processing. Advanced algorithms play an important role in sifting through large amounts of data. Traditional algorithms are explicitly programmed instructions, but the advanced algorithms used in AI machines allow them to learn on their own. Sophisticated algorithms maximize data utility, facilitate effective analysis and power intelligent automation.

There are many types of AI algorithms that can be classified as follows [xx]:

Classification Algorithms (supervised machine learning): Used for dividing data into classes and predicting the class of a specific input. For example, classifying emails allows spam to be filtered out of a person’s main inbox. Some of the commonly used classification algorithms include Naive Bayes, Decision Tree, Random Forest, Support Vector Machines, and K Nearest Neighbors.
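As a minimal sketch of one of these algorithms, the example below implements K Nearest Neighbors on a tiny spam-filtering problem. The feature choice (number of links and exclamation marks per email) and the data are made up for illustration, and `knn_predict` is a name chosen for this example rather than a library function.

```python
from collections import Counter
import math


def knn_predict(training_data, query, k=3):
    """Predict a label by majority vote among the k nearest training points."""
    by_distance = sorted(training_data, key=lambda item: math.dist(item[0], query))
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Made-up features per email: (number of links, number of exclamation marks).
emails = [
    ((0, 0), "ham"),
    ((1, 0), "ham"),
    ((0, 1), "ham"),
    ((7, 5), "spam"),
    ((8, 6), "spam"),
    ((6, 7), "spam"),
]

print(knn_predict(emails, (7, 6)))  # close to the spam examples
print(knn_predict(emails, (1, 1)))  # close to the ham examples
```

The model here is just the labeled data itself: a new email gets the label that most of its closest neighbors carry.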

Regression Algorithms (supervised machine learning): Used for predicting a numerical output based on the input. Examples include predicting stock prices and the weather. Linear Regression, Lasso Regression, Multivariate Regression, and Multiple Regression are among the most popular regression algorithms. Logistic Regression is often listed alongside them, although despite its name it is mostly used for classification.
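The simplest case, linear regression with a single input, can be sketched in a few lines using the ordinary least squares formulas. The data points below are invented so that they lie exactly on the line y = 2x + 1, which makes the result easy to check; `fit_line` is a name chosen for this example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data that follows y = 2x + 1 exactly.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]
slope, intercept = fit_line(xs, ys)

print(slope, intercept)       # the fitted line
print(slope * 6 + intercept)  # prediction for an unseen input, x = 6
```

Real data is noisy, so the fitted line would only approximate the points, but the prediction step works the same way.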

Clustering Algorithms (unsupervised learning): Used for segregating and organizing data into groups on the basis of similarity. For example, these algorithms are used in grouping all suspicious banking transactions based on some common properties. Commonly used clustering algorithms include K-Means clustering, Fuzzy C-means, Expectation-Maximization and Hierarchical Clustering.
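A bare-bones version of K-Means can be sketched as follows, here on one-dimensional data to keep it short. The transaction amounts and starting centers are made up for illustration, and `k_means_1d` is a name chosen for this example; note that no labels are supplied, which is what makes the approach unsupervised.

```python
def k_means_1d(values, centers, iterations=10):
    """Cluster numbers by alternating assignment and center-update steps."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            # Assignment step: put each value in the cluster with the nearest center.
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Update step: move each center to the mean of its cluster (keep it if empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters


# Made-up transaction amounts: a few small ones and a few unusually large ones.
amounts = [12, 15, 9, 14, 980, 1020, 1005]
centers, clusters = k_means_1d(amounts, centers=[0, 100])

print(centers)
print(clusters)
```

The large transactions end up in their own group purely because they are similar to each other, which is the idea behind grouping suspicious transactions by common properties.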

APIs (Application Programming Interfaces)

APIs allow transmission of information between compatible software or systems. The main goals of using APIs are to improve efficiency and minimize data duplication. Instead of feeding the same data to different systems, APIs allow users to share information from one system with another.

AI-enabled systems require huge amounts of data to work efficiently. Feeding such large volumes of information using traditional methods is a time-consuming and costly process. APIs simplify the process of feeding data into AI systems. For example, Google Prediction API learns from the training data provided by a user and can directly communicate with machine learning systems, eliminating the need to separately input training data[xxi].

Developers and AI researchers can use APIs to perform different tasks such as spam detection, sentiment analysis, purchase prediction and data classification. Wit.ai, a platform primarily used to build chatbots using NLP, is another example of how an NLP platform enables developers to integrate speech functionality into mobile and web apps. Some use cases include adding a voice interface to smart cars, home automation, smart TVs, smartphones, wearables, and robots.
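In practice, handing data to an AI service through an API often comes down to sending a structured request over HTTP. The sketch below only builds such a request and does not send it; the URL and the JSON field name are hypothetical and do not correspond to any of the services mentioned above.

```python
import json
import urllib.request

# Hypothetical endpoint, used only for illustration; it is not a real service.
API_URL = "https://api.example.com/v1/sentiment"


def build_request(text):
    """Package text as a JSON POST request for a hypothetical sentiment API."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_request("The delivery was fast and the support team was helpful.")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# Sending would be urllib.request.urlopen(request); omitted because the URL is fictional.
```

The calling application never needs to know how the model behind the endpoint works, only what to send and what comes back.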

The Human Brain vs. Computers

Computers have evolved into powerful machines that can beat humans in chess and even Jeopardy! But do the breakthroughs in AI really mean computers have become better than a human brain? When comparing a human brain vs. a computer, it’s important to define a computer, which here refers to an average personal desktop computer. This makes it easier to compare the two in four key areas: processing speed, memory, storage, and energy efficiency.

The problem with this comparison is that these things cannot be accurately quantified for a human brain, so it is largely a comparison for its own sake. A desktop computer usually has around 1 terabyte of storage, while a human brain has over a trillion connections between neurons. This translates into a far bigger storage capacity, estimated to be equivalent to around 1 petabyte, or 1,000 x 1 TB[xxii].

Memory refers to the speed at which a brain or computer can recall information. Humans have a tendency to forget facts and information over time, but anything stored inside a computer is instantly accessible, at any time. However, a human brain can store and recall different types of memories, not just facts and numbers, and can also relate these memories. Computers are good at storing/remembering and accessing information, but they lack the ability to relate memories.

When it comes to energy efficiency, the human brain is the clear winner, as it requires significantly less energy. A desktop computer uses around 100 watts, but a human brain needs the equivalent of only about 10 watts. For these reasons, it’s not easy to declare an overall winner. Computers have more raw processing power and precision, but they fall short in areas such as creativity, energy efficiency and relating memories to one another.

Key Characteristics and Features of AI

Constantly moving goalposts and new developments have been reshaping AI for over 50 years. AI touches our world in both obvious and not-so-obvious ways. AI generally refers to ‘intelligent machines’ that can think and make decisions on their own, but there are some key characteristics [xxiii] that help define and better understand the technology.

Capacity to Adapt and Predict

Advanced algorithms help us discover patterns that might otherwise be invisible. They help us make predictions and adapt according to the inputs received. AI systems learn from these patterns much as we learn from practice. Machine intelligence works behind the scenes of many of our everyday tasks, including text prediction and spelling correction, time and traffic estimates, and best route recommendation. At a larger scale are advanced systems such as fully autonomous self-driving cars and legal applications that automatically flag documents as relevant.

Decision-making Capability

AI can improve productivity by delivering insights and augmenting human intelligence. The ability to analyze and understand data and gain new insights is the real power behind AI, which ultimately aids in making better decisions. This ability comes with a price. Massive volumes of data must be fed into the system for it to learn and make smarter decisions.

Continuous Learning

Machine learning is a subset of AI, while deep learning is a subset of machine learning. These technologies allow AI systems to continually learn from patterns. For example, digital assistants such as Siri, Google Assistant, and Amazon’s Alexa use advanced algorithms to build analytical models that can learn from trial and error. NLP is another example: it continuously learns from its input and is used in many applications, including legal document analysis.

Reactive

AI in its basic form is reactive and cannot exercise free will or make moral choices. It acts based on how it perceives a problem. Reactive AI chooses possible moves based on what’s in front of it and cannot ‘think’ on its own. Digital assistants can predict user actions, but they are limited to user choice and personal preferences to make recommendations, which makes many forms of AI in use today reactive and limited in functionality.

Forward-looking

The ability of AI systems to converse is expected to advance significantly in the coming years in key areas including regulation, security, research, law, and healthcare. AI visionaries believe that the best of what AI has to offer is yet to come. AI is already being used in a variety of industries, including healthcare, law, finance, marketing, and supply chain management. The key areas where significant advancement is expected in the future include behavioral analysis, fraud detection, real-time workflow measurement, document analysis, document review, e-discovery, predicting future legal outcomes, and classifying large amounts of data.

Perception and Motion

Thanks to the amount of data available today, AI has transformed into AI 2.0, referring to AI’s new capabilities in the areas of auditory and speech perception, perceptual processing, and learning. It’s used in different applications ranging from self-driving cars to Siri and Alexa. The technology has constantly evolved from being responsive to some simple questions to autonomous cars and complex forecasting models.

Data Ingestion

AI can analyze massive amounts of data from multiple sources. The exponential growth of data led to many breakthroughs in the field[xxiv]. Traditional software and databases are inefficient in dealing with such massive amounts of data. AI-enabled systems and IoT minimize the need for feeding data manually and can gather and analyze it automatically based on the previous learning.

Adaptive

AI is agile, efficient, and robust, making it significantly more adaptable than traditional software. Agility refers to the capacity to adjust the operating conditions of AI-enabled systems according to the workload, while efficiency refers to low use of resources. AI machines are able to complete tasks with algorithmic precision, and together these three traits make AI adaptable to a variety of situations and applications.

Concurrent

Older technologies are limited when it comes to building complex interpretation or problem-solving systems, and the resulting systems are unable to adapt to changing situations. Complex problem solving requires means to gather, classify, represent, organize, store, and manipulate massive amounts of data, and to incrementally adjust to new techniques and knowledge. AI allows developers and researchers to concurrently consider interactions as well as tradeoffs between conflicting requirements, which is becoming more and more difficult with current systems.

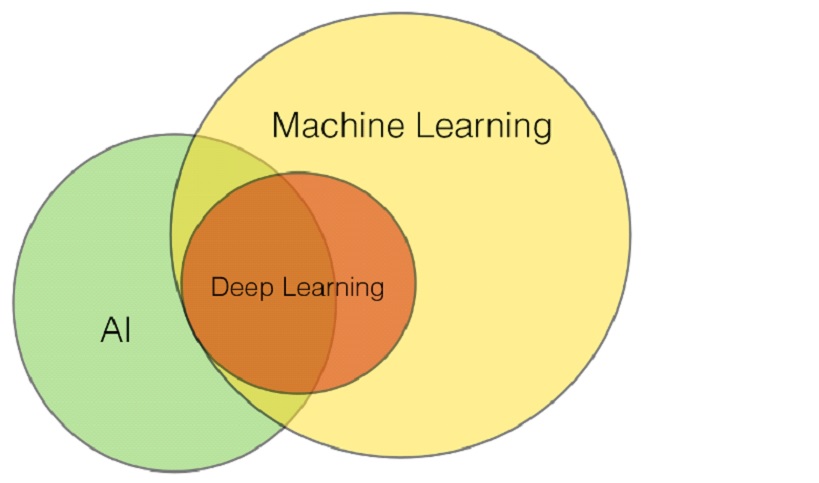

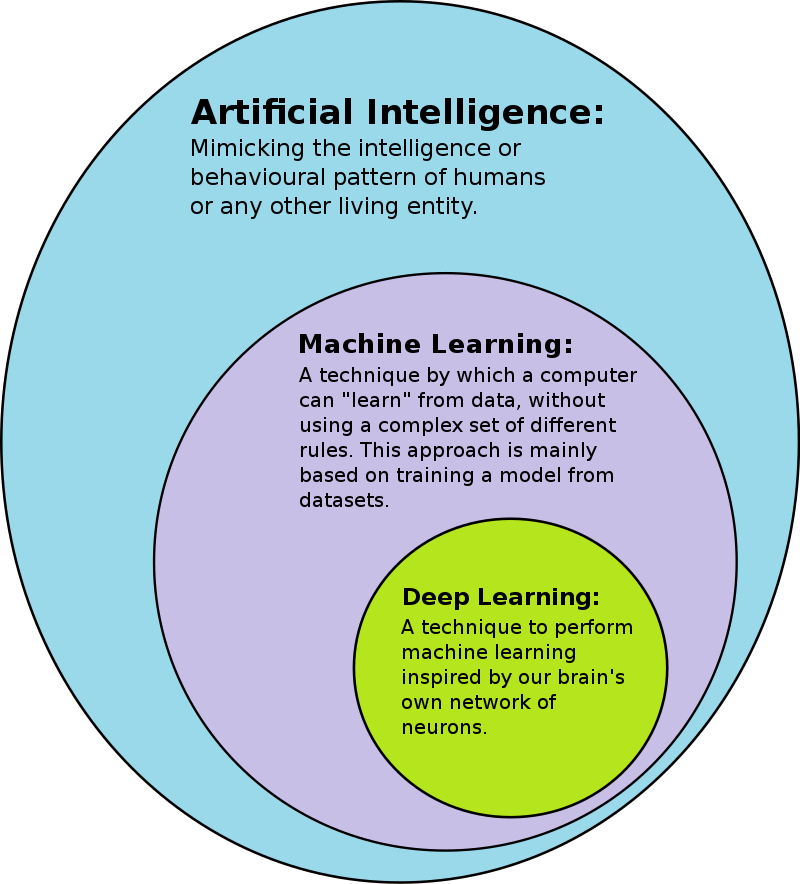

AI vs. Machine Learning vs. Deep Learning

Most of today’s Narrow AI is powered by advancements in the sub-domains of machine and deep learning. Machine learning is a subset of AI, while deep learning is a subset of machine learning[xxv]. AI is a broader field that aims to design systems that are able to ‘think’ and behave like a human. Machine learning is a specific area that deals with training a machine to learn and automate analytical modeling.

Machine learning algorithms use both labeled and unlabeled datasets (supervised vs. unsupervised learning). This saves programmers from having to write millions of lines of code to program everything related to performing a certain task.

Machine Learning Methods

- Learning Problems

  - Supervised Learning (classification and regression)

  - Unsupervised Learning (clustering, density estimation, visualization, projection)

  - Reinforcement Learning (learning from feedback in the form of numerical reward signals)

- Hybrid Learning Problems

  - Semi-supervised Learning (the data contains many more unlabeled examples than labeled examples)

  - Self-supervised Learning (e.g. autoencoders, where the input also serves as the target output)

  - Multi-instance Learning (supervised learning in which groups of examples, rather than individual examples, are labeled)

- Statistical Inference

  - Inductive Learning (using evidence or specific cases to determine general outcomes)

  - Deductive Inference (using general rules to determine specific outcomes)

  - Transductive Learning (using specific examples to determine specific outcomes)
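Supervised and unsupervised learning work from a fixed dataset, whereas reinforcement learning relies on feedback gathered while acting. The sketch below shows that idea with an epsilon-greedy agent choosing between three actions; the fixed reward values, the exploration rate and the function name `run_bandit` are all assumptions made for this toy example.

```python
import random


def run_bandit(rewards, steps=500, epsilon=0.1, seed=0):
    """Epsilon-greedy agent that learns action values from reward feedback."""
    rng = random.Random(seed)
    estimates = [0.0] * len(rewards)
    pulls = [0] * len(rewards)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(rewards))  # explore: try a random action
        else:
            action = max(range(len(rewards)), key=lambda a: estimates[a])  # exploit
        reward = rewards[action]  # numerical feedback from the toy environment
        pulls[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / pulls[action]
    return estimates, pulls


# Made-up environment: three actions with fixed rewards; action 1 pays best.
estimates, pulls = run_bandit([0.2, 0.8, 0.5])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_action, pulls)
```

Nobody tells the agent which action is correct. It discovers the best one by occasionally exploring and keeping track of the rewards it receives.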

Machine Learning Techniques

- Multi-task learning: Models are trained on multiple related tasks in order to improve performance

- Active Learning (in contrast to passive supervised learning): The model can query a human operator to resolve ambiguity

- Online Learning: Data becomes available in sequential order and is used to update the best predictor for future data at each step

- Transfer Learning: A model is trained for a specific task before being used for other related tasks

- Ensemble Learning: Predictions from two or more models are combined for better performance
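Of the techniques above, ensemble learning is perhaps the easiest to sketch: several models each make a prediction and the most common answer wins. The three rule-based "models" below are deliberately simple, hypothetical stand-ins for trained models, and the email fields are invented for the example.

```python
from collections import Counter


def majority_vote(models, sample):
    """Combine predictions from several models by taking the most common label."""
    predictions = [model(sample) for model in models]
    return Counter(predictions).most_common(1)[0][0]


# Three deliberately simple, hypothetical spam rules standing in for trained models.
def many_links(email):
    return "spam" if email["links"] > 3 else "ham"


def shouting(email):
    return "spam" if email["exclamations"] > 2 else "ham"


def unknown_sender(email):
    return "spam" if not email["known_sender"] else "ham"


models = [many_links, shouting, unknown_sender]
email = {"links": 5, "exclamations": 0, "known_sender": False}
print(majority_vote(models, email))  # two of the three rules vote spam
```

Each rule on its own is easy to fool, but a mistake by one model can be outvoted by the others, which is where the performance gain comes from.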

Deep learning uses layers of processing units to learn complex patterns on the basis of huge amounts of data and tries to mimic a human brain. Its neural networks have more layers and denser interconnections than earlier, shallower networks. Deep learning is considered to be the next big thing in the field of machine learning, and various types of deep learning algorithms are in use, including[xxvi]:

- Convolutional Neural Networks: multiple layers, used mainly for object detection and image processing

- Recurrent Neural Networks: commonly used for time-series analysis, image captioning, NLP, machine translation and handwriting recognition

- Long Short-term Memory Networks: a type of RNN, can memorize and learn long-term dependencies, mainly used in time-series prediction, music composition, speech recognition and pharmaceutical development

- Generative Adversarial Networks: consist of generator and discriminator, used for scientific purposes including dark matter research, simulating gravitational lensing, and improving astronomical images

- Radial Basis Function Networks: feedforward neural networks, consist of an input layer, a hidden layer and an output layer, used for time-series prediction, regression and classification

- Multilayer Perceptrons: feedforward neural networks, multiple perceptron layers, fully connected input-output layers, used for image recognition, speech-recognition and machine translation

- Self-Organizing Maps: designed to understand high-dimensional data which humans cannot visualize easily

- Deep Belief Networks: generative models, layers are connected, used for video/image recognition and motion capturing

- Restricted Boltzmann machines: building blocks of Deep Belief Networks, used for topic modeling, dimensionality reduction, regression, feature learning, classification and collaborative filtering

- Autoencoders: feedforward neural network with identical input and output (replicate input layer data to output layer), used for popularity prediction, pharma discovery and image processing
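To make the idea of layered processing units concrete, here is a minimal sketch of a forward pass through a tiny multilayer perceptron that computes XOR, a function a single layer of this kind cannot represent. The weights are set by hand for illustration rather than learned, and the function names are chosen for this example.

```python
def step(x):
    """Threshold activation: the unit fires (1) when its input is positive."""
    return 1 if x > 0 else 0


def layer(inputs, weights, biases):
    """One fully connected layer: weighted sum plus bias, then activation."""
    return [
        step(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


def xor_network(x1, x2):
    # Hidden layer: the first unit acts like OR, the second like AND.
    hidden = layer([x1, x2], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Output layer: fires when OR is on but AND is off, which is XOR.
    return layer(hidden, weights=[[1, -1]], biases=[-0.5])[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

In a real deep learning system the weights and biases are not chosen by hand but adjusted automatically during training, and there are far more units and layers.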

Fields of Focus

Research in the field of Artificial Intelligence is mainly focused on five key areas[xxvii].

Knowledge

Refers to the ability of a program to possess and represent knowledge and categorize different entities and their relationships with each other. The world around us has certain entities, situations, and facts associated with it, which are interconnected with each other.

Reasoning

Reasoning is the ability to solve problems and derive beliefs and conclusions from the input data using logic. Reasoning can be deductive, inductive, or abductive; the deductive and inductive forms work as described under statistical inference in the machine learning methods above.

Planning

AI machines are programmed to set and achieve specific goals, which requires specifying a desirable future state and the actions needed to reach that state.
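A very small planner can be sketched as a search from the current state to the desired state. In the toy problem below, states are numbers and the only actions are "add 1" and "double"; both the problem and the function name `plan` are assumptions made for illustration, and breadth-first search is just one of many possible planning strategies.

```python
from collections import deque

# Hypothetical toy actions: each maps a numeric state to a new state.
ACTIONS = [("add 1", lambda s: s + 1), ("double", lambda s: s * 2)]


def plan(start, goal):
    """Breadth-first search for a shortest sequence of actions from start to goal."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        state, steps = queue.popleft()
        if state == goal:
            return steps
        for name, apply in ACTIONS:
            nxt = apply(state)
            # Both actions only increase a positive state, so overshooting never helps.
            if nxt <= goal and nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, steps + [name]))
    return None  # no plan exists


print(plan(1, 10))
```

Because breadth-first search explores shorter action sequences first, the first plan it finds uses as few actions as possible.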

Communication

Refers to the ability of AI machines to understand written/spoken language, which allows them to communicate with humans.

Perception

Using sensory inputs to make deductions. AI systems need the ability to identify, organize and interpret sounds, images and other sensory data to solve problems and make decisions.

AIaaS (AI as a Service)

Similar to SaaS (Software as a Service), IaaS (Infrastructure as a Service) and PaaS (Platform as a Service), many vendors offer AI components to researchers as a subscription. This saves individuals and businesses from having to invest heavily in infrastructure and reduces their software and maintenance costs. The model works well for researchers and developers who want to experiment with AI before making a long-term commitment. Some prominent players in the AIaaS market include IBM Watson Assistant, Amazon AI, Google AI, and Microsoft Cognitive Services.

Conclusion: Why Should You Care?

AI has come a long way over the last 60 years and has become one of the hottest buzzwords. Some people love AI, while others are highly skeptical of what it might do in the future. The important thing is to understand its current and future implications without being paranoid about advancements in the field. We regularly see media warnings that AI could take away all our jobs and replace us in the workforce.

However, AI has its limitations. It can neither take all our jobs nor serve as a panacea for our biggest problems. AI is going to integrate into our lives pretty soon, which means we need to understand how it will impact our daily routines. Instead of replacing all our jobs, AI will become a part of many of our jobs and augment our work by handling the mundane tasks.

This allows us to focus more on creative tasks that require innovation and collaboration. In other words, there is no substitute for creativity and effective communication, and AI is not going to replace these anytime soon. Offloading administrative and repetitive tasks to machines will allow human employees to work more efficiently, which makes AI a tool rather than a threat to our jobs.

AI is already impacting the health sector and is expected to have an even bigger impact and save lives in the near future. From practitioner-patient interactions to finding hidden patterns, AI is helping researchers tackle some of the most challenging diseases. AI can crawl large datasets in no time and detect patterns much faster than people can. This makes it possible to recognize patterns that otherwise elude human detection, which ultimately results in more effective and quicker discovery of new treatments and drugs.

AI is also going to have a profound effect on our daily lives, even if we don’t realize it. AI is expected to drive different functions, including supply chains, and to shape how you interact with technology. Virtual assistants like Siri and Alexa and driverless cars are just the beginning of a new era in which we will start treating AI and technology more like humans. Whether we like it or not, AI is on its way to driving the 4th industrial revolution, so now is the right time to embrace it to make sure no one is left behind.

[i] “Artificial Intelligence”. Retrieved from https://builtin.com/artificial-intelligence

[ii] “AI – IBM Cloud Education” (3 June 2020). Retrieved from https://www.ibm.com/cloud/learn/what-is-artificial-intelligence

[iii] “Overview of the Key Technologies of Artificial Intelligence” (11 April 2020). Retrieved from https://towardsdatascience.com/overview-of-the-key-technologies-of-artificial-intelligence-1765745cee3

[iv] “What is Artificial Intelligence? How does AI work, Types and Future of it?” (11 February 2021). Retrieved from https://www.mygreatlearning.com/blog/what-is-artificial-intelligence/#TypesofAI

[v] “What are the 3 types of AI? A guide to narrow, general, and super artificial intelligence”. Retrieved from https://codebots.com/artificial-intelligence/the-3-types-of-ai-is-the-third-even-possible

[vi] “Types of Artificial Intelligence”. Retrieved from https://www.javatpoint.com/types-of-artificial-intelligence

[vii] “The 3 types of AI”. Retrieved from https://www.bgp4.com/2019/04/01/the-3-types-of-ai-narrow-ani-general-agi-and-super-asi/

[viii] “7 Types Of Artificial Intelligence”. Retrieved from https://www.forbes.com/sites/cognitiveworld/2019/06/19/7-types-of-artificial-intelligence/?sh=29d32989233e

[ix] “4 Types of Artificial Intelligence” (8 June 2020). Retrieved from https://www.bmc.com/blogs/artificial-intelligence-types/

[x] “Understanding the Four Types of Artificial Intelligence”. Retrieved from https://www.govtech.com/computing/understanding-the-four-types-of-artificial-intelligence.html

[xi] “Machine Learning. What it is and why it matters”. Retrieved from https://www.sas.com/en_us/insights/analytics/machine-learning.html

[xii] “Neural Networks – IBM Cloud Education” (17 August 2020). Retrieved from https://www.ibm.com/cloud/learn/neural-networks

[xiii] “Neural Networks – What they are & why they matter”. Retrieved from https://www.sas.com/en_us/insights/analytics/neural-networks.html

[xiv] “Computer Vision”. Retrieved from https://www.sas.com/en_us/insights/analytics/computer-vision.html

[xv] “Deep Learning – IBM Cloud Education” (1 May 2020). Retrieved from https://www.ibm.com/cloud/learn/deep-learning

[xvi] “An executive’s guide to cognitive computing”. Retrieved from https://www.sas.com/en_us/insights/articles/big-data/executives-guide-to-cognitive-computing.html

[xvii] “Natural Language Processing – IBM Cloud Education” (2 July 2020). Retrieved from https://www.ibm.com/cloud/learn/natural-language-processing

[xviii] “What is the Internet of Things?”. Retrieved from https://www.zdnet.com/article/what-is-the-internet-of-things-everything-you-need-to-know-about-the-iot-right-now/

[xix] “Deep Learning with GPUs”. Retrieved from https://www.run.ai/guides/gpu-deep-learning/

[xx] “Types of Artificial Intelligence Algorithms You Should Know”. Retrieved from https://www.upgrad.com/blog/types-of-artificial-intelligence-algorithms/

[xxi] “How API and Artificial Intelligence can be complementary?”. Retrieved from https://apifriends.com/api-management/api-and-artificial-intelligence/

[xxii] “How does the human brain compare to a computer?”. Retrieved from https://www.crucial.com/blog/technology/how-does-the-human-brain-compare-to-a-computer

[xxiii] “What are the Key Qualities of AI?”. Retrieved from https://blog.rossintelligence.com/post/what-are-the-key-qualities-of-ai

[xxiv] “Features of Artificial Intelligence – The New Age Electricity”. Retrieved from https://data-flair.training/blogs/features-of-artificial-intelligence/

[xxv] “AI vs. Machine Learning vs. Deep Learning vs. Neural Networks: What’s the Difference? – IBM Cloud Education”. Retrieved from https://www.ibm.com/cloud/blog/ai-vs-machine-learning-vs-deep-learning-vs-neural-networks

[xxvi] “Different types of Deep Learning models explained”. Retrieved from https://roboticsbiz.com/different-types-of-deep-learning-models-explained/

[xxvii] “Why is AI important?”. Retrieved from https://www.stateofai2019.com/chapter-2-why-is-ai-important/